PLATFORM OVERVIEW

Cortex Cognitive Engine

Genetica’s Cortex Cognitive Engine is a comprehensive AI Development and Lifecycle Management Platform. Cortex provides an intuitive framework that manages the entire lifecycle of AI models, from development and experimentation through deployment and operationalization.

Enterprise-wide confidence and adoption are critical to the success of any AI project. Our platform is designed to support enterprise-wide collaboration throughout the entire lifecycle. This collaboration is made possible by our intuitive and transparent (no-code) UI. This transparency gives stakeholders a clearer understanding of the development process, which builds their confidence and encourages adoption of a model across the enterprise.

Cortex also incorporates best-of-breed MLOps practices to improve ML models’ speed, quality, and reliability while reducing the risk of errors and inconsistencies. Cortex enables organizations to get more value from their investment in Machine Learning projects.

ARCHITECTURE

The GeneticaAI Cortex Cognitive Engine© is a fully integrated end-to-end cloud-based AI application development and lifecycle management platform. Cortex provides a robust set of features and capabilities to develop, test, and rapidly deploy industrial-strength AI applications.

Cortex creates independent business objects that communicate with each other through language-agnostic APIs to form complex AI applications. The result is an enduring, unified system of computing objects that turns business data into actionable intelligence.

Cortex operates as a stateless computing node with a high degree of linear scalability. Its middle-tier application logic layer can be configured to run on as many computing hosts as needed, and its data tier runs on MongoDB’s scalable sharding and replication architecture.

Cortex has been designed from the ground up for high-throughput, fault-tolerant computing environments, making it well suited to data-intensive, real-time applications that run across distributed devices.
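The two ideas in this paragraph, stateless nodes and a sharded data tier, can be illustrated with a minimal sketch. It is not Cortex code and not MongoDB’s actual sharding algorithm: the function names `shard_for` and `handle_request`, the node names, and the choice of SHA-256 are all assumptions made for illustration.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a record key to a shard index with a stable hash (illustrative only)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def handle_request(node_name: str, key: str, shard_count: int) -> dict:
    """A stateless handler: the result depends only on the arguments,
    so any node in the middle tier could serve the same request."""
    return {"served_by": node_name, "shard": shard_for(key, shard_count)}


# Two hypothetical nodes route the same key to the same shard.
first = handle_request("node-a", "customer:1001", shard_count=4)
second = handle_request("node-b", "customer:1001", shard_count=4)
print(first["shard"] == second["shard"])
```

Because no handler keeps state between calls, adding hosts to the middle tier does not change where a given record lives.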

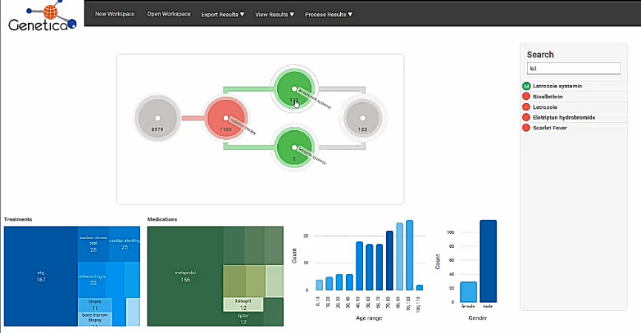

Multiple external datasets can be easily consolidated or merged simply by dragging the datasets onto the Datamart canvas and allowing our auto-linking function to link the files, without the need to identify and manually join tables.

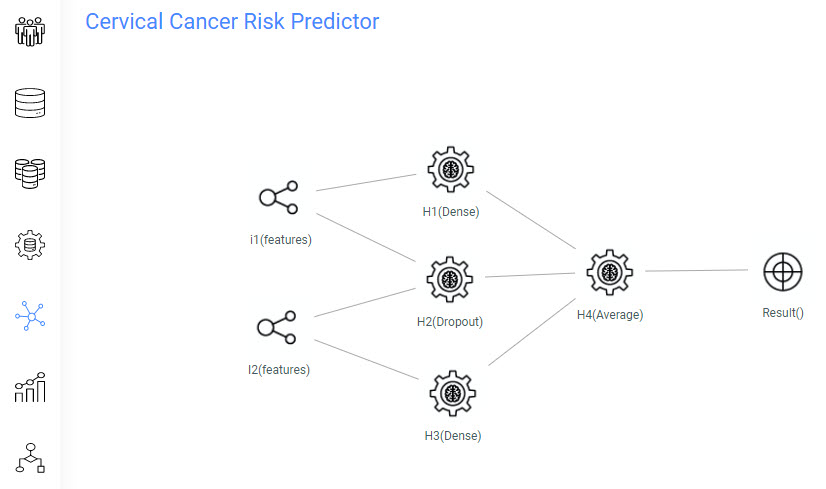
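For readers who want a feel for what auto-linking involves conceptually, the sketch below joins two small datasets on whatever column names they share. It is an assumption-laden illustration, not the Datamart implementation: the helper names `shared_columns` and `auto_link` and the sample data are hypothetical, and the real function may use richer matching than column names.

```python
from typing import Dict, List

Row = Dict[str, object]


def shared_columns(left: List[Row], right: List[Row]) -> List[str]:
    """Return the column names present in both datasets, sorted."""
    if not left or not right:
        return []
    return sorted(set(left[0]) & set(right[0]))


def auto_link(left: List[Row], right: List[Row]) -> List[Row]:
    """Inner-join two datasets on all of their shared column names."""
    keys = shared_columns(left, right)
    if not keys:
        raise ValueError("no shared columns to link on")
    # Index the right-hand rows by their key values for fast lookup.
    index: Dict[tuple, List[Row]] = {}
    for row in right:
        index.setdefault(tuple(row[k] for k in keys), []).append(row)
    merged: List[Row] = []
    for row in left:
        for match in index.get(tuple(row[k] for k in keys), []):
            merged.append({**row, **match})
    return merged


customers = [{"customer_id": 1, "name": "Ada"}, {"customer_id": 2, "name": "Grace"}]
orders = [{"customer_id": 1, "total": 30.0}, {"customer_id": 1, "total": 12.5}]
linked = auto_link(customers, orders)
print(linked)
```

In this example the only shared column is `customer_id`, so the two orders are linked to the matching customer and the customer without orders is dropped.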

With Genetica, no complex R or Python coding is required. Models are built by dragging intelligent nodes onto a canvas to identify the desired result, allow for data entry, and select algorithms and functions.

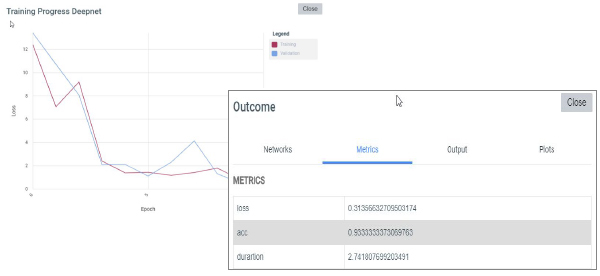

The model then automatically goes through a training and testing process, and once it reaches its optimal result it is ready for deployment.
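The automated train-and-test step can be pictured as the loop sketched below: hold out part of the data, fit each candidate on the rest, and keep the candidate with the lowest error on the held-out rows. This is a generic illustration under stated assumptions, not a description of how Cortex selects models. The two candidates (`fit_mean`, `fit_line`), the 75/25 split, and the mean-squared-error metric are all hypothetical choices.

```python
import random
from statistics import mean


def split(rows, test_fraction=0.25, seed=0):
    """Shuffle deterministically and split into train and test rows."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def fit_mean(train):
    """Baseline candidate: always predict the mean of the training targets."""
    m = mean(y for _, y in train)
    return lambda x: m


def fit_line(train):
    """Candidate: ordinary least-squares line through the training rows."""
    mx = mean(x for x, _ in train)
    my = mean(y for _, y in train)
    sxx = sum((x - mx) ** 2 for x, _ in train)
    slope = sum((x - mx) * (y - my) for x, y in train) / sxx
    return lambda x: my + slope * (x - mx)


def mse(model, rows):
    """Mean squared error of a fitted model on the given rows."""
    return mean((model(x) - y) ** 2 for x, y in rows)


def select_best(rows, candidates):
    """Train every candidate, score it on held-out rows, return the best."""
    train, test = split(rows)
    scores = {name: mse(fit(train), test) for name, fit in candidates.items()}
    return min(scores, key=scores.get), scores


data = [(float(x), 2.0 * x + 1.0) for x in range(20)]
best, scores = select_best(data, {"mean": fit_mean, "line": fit_line})
print(best, scores)
```

On this synthetic, exactly linear data the line candidate has essentially zero held-out error, so it is the one selected.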

Cortex is a platform for generating domain-specific enterprise-class scalable mobile and web applications with built-in services for AI/machine learning, IoT, data lakes, GIS, and data mining.

Cortex helps domain experts design, generate, deploy, and manage microservices and mobile apps that communicate with each other through language-agnostic APIs to form complex applications.

All external computing components of our platform are best-of-breed and open source, which helps future-proof the platform. It operates as a self-organizing computing cluster with a high degree of linear scalability. Its middle-tier application logic layer runs under a Node runtime environment. It can be configured to run on as many computing hosts as needed while maintaining and managing its “cluster state” via the Kafka streaming engine. The data tier runs on MongoDB’s scalable sharding and replication architecture.

Instead of monoliths, Genetica’s applications are decomposed into smaller, decentralized services. These services communicate through APIs or by using asynchronous messaging (MQTT and Kafka). Applications scale horizontally, adding new instances as demand requires.
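The asynchronous messaging pattern described here can be sketched with two small services that never call each other directly and only exchange messages through a shared topic. The sketch uses Python’s `asyncio.Queue` as an in-process stand-in for a broker; it does not use Kafka or MQTT, and the service names and the doubling "work" are assumptions for illustration.

```python
import asyncio


async def sensor_service(topic: asyncio.Queue, readings) -> None:
    """Producer: publish each reading, then a sentinel to signal completion."""
    for reading in readings:
        await topic.put(reading)
    await topic.put(None)


async def scoring_service(topic: asyncio.Queue, results: list) -> None:
    """Consumer: process messages as they arrive until the sentinel is seen."""
    while True:
        message = await topic.get()
        if message is None:
            break
        results.append(message * 2)  # placeholder for real processing


async def main() -> list:
    topic: asyncio.Queue = asyncio.Queue()
    results: list = []
    # Both services run concurrently on a single-threaded event loop.
    await asyncio.gather(
        sensor_service(topic, [1, 2, 3]),
        scoring_service(topic, results),
    )
    return results


print(asyncio.run(main()))
```

Because the producer only knows about the topic, more consumers could be attached to the same stream without changing the producer, which is the property that lets such services scale horizontally.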

The platform’s runtime environment is built for data-intensive, real-time applications: it is single-threaded, event-driven, and designed for elastic scaling and automated self-management.

The application state is distributed in a self-organizing cluster of nodes. Operations are done in parallel and asynchronously. The system as a whole is resilient when failures occur. Deployments are automated and predictable. Monitoring and telemetry are built-in for gaining insight into the system.

Planning to commence a new AI Project

There are four challenges that need to be addressed for a successful AI project:

1. Stop looking at AI as some form of the ‘Black Arts’

If your AI team can’t explain the process in business terms, there isn’t much chance they are going to solve business problems.

2. Start with a business problem – not technology

Identify a particular business challenge first, one for which you can define real project outcomes and a tangible ROI, before investing in resources and technology.

3. Ensure you have enterprise-wide buy-in

With greater transparency comes greater confidence from non-technical stakeholders, which ultimately leads to greater uptake and adoption across the enterprise.

4. Data is king

ML projects are always ‘data-intensive’; this data resides in multiple systems, both internal and external to the business, and will be in different formats (relational, image, sound, etc.). You need a platform that handles the acquisition and consolidation of all data, and the elimination of any data bias, intuitively and without coding.